Review: If $X$ is normal with mean $\mu$ and standard deviation $\sigma$, then

$$Z = \frac{X - \mu}{\sigma}$$

is the Standard Normal Distribution with mean 0 and standard deviation 1. To find a probability such as $\Pr(X \le x)$, you would convert $x$ to the standard normal distribution, $z = (x - \mu)/\sigma$, and look up $\Pr(Z \le z)$ in the standard normal table.
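A minimal Python sketch of the standardize-then-look-up step. It assumes the standard library's `statistics.NormalDist` (Python 3.8+) as a stand-in for the printed table; the function name `prob_at_most` and the example numbers are illustrative only.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)

def prob_at_most(x, mu, sigma):
    """Pr(X <= x) for X ~ Normal(mu, sigma^2)."""
    z = (x - mu) / sigma              # convert x to the standard normal scale
    return STANDARD_NORMAL.cdf(z)     # Phi(z): the "table lookup"

# Illustrative example: X ~ N(100, 15^2), so x = 130 sits at z = 2
p = prob_at_most(130, 100, 15)
print(f"Pr(X <= 130) = {p:.4f}")
```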

If $Y$ is a weighted sum of $n$ normal random variables $X_1, X_2, \dots, X_n$, with means $\mu_i$, variances $\sigma_i^2$, and weights $w_i$, then

![\displaystyle E\left[\sum_{i=1}^n w_iX_i\right] = \sum_{i=1}^n w_i\mu_i](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+E%5Cleft%5B%5Csum_%7Bi%3D1%7D%5En+w_iX_i%5Cright%5D+%3D+%5Csum_%7Bi%3D1%7D%5En+w_i%5Cmu_i&bg=ffffff&fg=333333&s=0&c=20201002)

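and variance

$$\mathrm{Var}\left(\sum_{i=1}^n w_iX_i\right) = \sum_{i=1}^n\sum_{j=1}^n w_iw_j\sigma_{ij}$$

where $\sigma_{ij}$ is the covariance between $X_i$ and $X_j$. Note that when $i = j$, $\sigma_{ii} = \sigma_i^2$.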
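The mean and variance formulas can be checked with a short Python sketch. The function name `weighted_sum_moments` and the example inputs are hypothetical; `cov[i][j]` plays the role of $\sigma_{ij}$, so its diagonal holds the variances $\sigma_i^2$.

```python
def weighted_sum_moments(weights, means, cov):
    """Mean and variance of Y = sum_i w_i X_i.

    cov[i][j] is the covariance sigma_ij; cov[i][i] is the variance sigma_i^2.
    """
    n = len(weights)
    mean = sum(w * m for w, m in zip(weights, means))
    # Double sum over all (i, j) pairs, so each cross term appears twice
    var = sum(weights[i] * weights[j] * cov[i][j]
              for i in range(n) for j in range(n))
    return mean, var

# Illustrative inputs: two correlated variables
w = [0.6, 0.4]
mu = [0.08, 0.05]
cov = [[0.04, 0.006],
       [0.006, 0.01]]
m, v = weighted_sum_moments(w, mu, cov)
print(m, v)
```

Writing the variance as a double sum keeps the code a direct transcription of the formula; for large $n$ a matrix product $w^\top \Sigma w$ would be the usual alternative.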

Remember: A sum of random variables is not the same as a mixture distribution! With the same weights, the expected value is the same, but the variance is not. A sum of jointly normal random variables (for example, independent normals) is also normal. So $Y$ is normal with the above mean and variance.
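To see the difference numerically, here is a sketch comparing a 50/50 weighted sum of two independent normals with a 50/50 mixture of the same two distributions; the parameters are illustrative. For the mixture, the variance comes from $E[Y^2] - E[Y]^2$ with $E[Y^2] = \sum_i w_i(\sigma_i^2 + \mu_i^2)$.

```python
def weighted_sum_moments_indep(weights, means, variances):
    """Y = sum_i w_i X_i with independent X_i (sigma_ij = 0 for i != j)."""
    mean = sum(w * m for w, m in zip(weights, means))
    var = sum(w * w * v for w, v in zip(weights, variances))
    return mean, var

def mixture_moments(weights, means, variances):
    """Y drawn from distribution i with probability w_i (weights sum to 1)."""
    mean = sum(w * m for w, m in zip(weights, means))
    second_moment = sum(w * (v + m * m) for w, m, v in zip(weights, means, variances))
    return mean, second_moment - mean ** 2

w, mu, var = [0.5, 0.5], [0.0, 10.0], [1.0, 1.0]
print(weighted_sum_moments_indep(w, mu, var))  # (5.0, 0.5)
print(mixture_moments(w, mu, var))             # (5.0, 26.0)
```

Both have mean 5, but the mixture's variance also picks up the spread between the component means.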

Actuary Speak: This is called a stable distribution. A sum of independent random variables from the same distribution family produces a random variable that is also from the same distribution family.

The fun stuff:

If $X$ is normal, then $Y = e^X$ is lognormal. If $X$ has mean $\mu$ and standard deviation $\sigma$, then

![\begin{array}{rll} \displaystyle E\left[Y\right] &=& E\left[e^X\right] \\ \\ \displaystyle &=& e^{\mu + \frac{1}{2}\sigma^2} \\ \\ Var\left(e^X\right) &=& e^{2\mu + \sigma^2}\left(e^{\sigma^2} - 1\right)\end{array}](https://s0.wp.com/latex.php?latex=%5Cbegin%7Barray%7D%7Brll%7D+%5Cdisplaystyle+E%5Cleft%5BY%5Cright%5D+%26%3D%26+E%5Cleft%5Be%5EX%5Cright%5D+%5C%5C+%5C%5C+%5Cdisplaystyle+%26%3D%26+e%5E%7B%5Cmu+%2B+%5Cfrac%7B1%7D%7B2%7D%5Csigma%5E2%7D+%5C%5C+%5C%5C+Var%5Cleft%28e%5EX%5Cright%29+%26%3D%26+e%5E%7B2%5Cmu+%2B+%5Csigma%5E2%7D%5Cleft%28e%5E%7B%5Csigma%5E2%7D+-+1%5Cright%29%5Cend%7Barray%7D&bg=ffffff&fg=333333&s=0&c=20201002)
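A small Python sketch of these two formulas, with a seeded Monte Carlo check as a sanity test. The function names, the seed, and the sample size are arbitrary choices for illustration.

```python
import math
import random

def lognormal_moments(mu, sigma):
    """Mean and variance of Y = e^X when X ~ Normal(mu, sigma^2)."""
    mean = math.exp(mu + 0.5 * sigma ** 2)
    var = math.exp(2 * mu + sigma ** 2) * (math.exp(sigma ** 2) - 1)
    return mean, var

def simulated_moments(mu, sigma, n=200_000, seed=2020):
    """Monte Carlo estimate of the same moments (sample mean and variance)."""
    rng = random.Random(seed)
    ys = [math.exp(rng.gauss(mu, sigma)) for _ in range(n)]
    mean = sum(ys) / n
    var = sum((y - mean) ** 2 for y in ys) / (n - 1)
    return mean, var

exact = lognormal_moments(0.0, 0.5)
approx = simulated_moments(0.0, 0.5)
print(exact, approx)
```

Note that $E[e^X] = e^{\mu + \sigma^2/2}$ exceeds $e^{\mu}$: exponentiating the mean is not the same as taking the mean of the exponential.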

Recall $Y = e^X$, where $Y$ is the future value of one unit invested at a continuously compounded rate of $X$ for one period. If the rate of growth is a normally distributed random variable, then the future value is lognormal. The Black-Scholes model for option prices assumes stocks appreciate at a continuously compounded rate that is normally distributed:

$$S_t = S_0e^{R(0,t)}$$

where $S_t$ is the stock price at time $t$, $S_0$ is the current price, and $R(0,t)$ is the random variable for the rate of return from time 0 to $t$. Now consider the situation where $R(0,t)$ is the sum of iid normal random variables $R(0,h), R(h,2h), \dots, R((n-1)h, nh)$, with $t = nh$, each having mean $\mu_h$ and variance $\sigma_h^2$. Then

![\begin{array}{rll} E\left[R(0,t)\right] &=& n\mu_h \\ Var\left(R(0,t)\right) &=& n\sigma_h^2 \end{array}](https://s0.wp.com/latex.php?latex=%5Cbegin%7Barray%7D%7Brll%7D+E%5Cleft%5BR%280%2Ct%29%5Cright%5D+%26%3D%26+n%5Cmu_h+%5C%5C+Var%5Cleft%28R%280%2Ct%29%5Cright%29+%26%3D%26+n%5Csigma_h%5E2+%5Cend%7Barray%7D&bg=ffffff&fg=333333&s=0&c=20201002)

If  represents 1 year, this says that the expected return in 10 years is 10 times the one year return and the standard deviation is

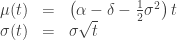

represents 1 year, this says that the expected return in 10 years is 10 times the one year return and the standard deviation is  times the annual standard deviation. This allows us to formulate a function for the mean and standard deviation with respect to time. Suppose we write

times the annual standard deviation. This allows us to formulate a function for the mean and standard deviation with respect to time. Suppose we write

where $\alpha$ is the expected continuously compounded growth rate of the stock and $\delta$ is the continuous rate of dividend payout. Since every normal random variable is a linear transformation of the standard normal, we can write $R(0,t) = \left(\alpha - \delta - \tfrac{1}{2}\sigma^2\right)t + \sigma\sqrt{t}\,Z$. The model for the stock price becomes

$$S_t = S_0e^{\left(\alpha - \delta - \frac{1}{2}\sigma^2\right)t + \sigma\sqrt{t}\,Z}$$

In this model, the expected value of the stock price at time $t$ is

![E\left[S_t\right] = S_0e^{(\alpha - \delta)t}](https://s0.wp.com/latex.php?latex=E%5Cleft%5BS_t%5Cright%5D+%3D+S_0e%5E%7B%28%5Calpha+-+%5Cdelta%29t%7D&bg=ffffff&fg=333333&s=0&c=20201002)
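A Python sketch of the model under illustrative parameters ($S_0 = 40$, $\alpha = 0.15$, $\delta = 0.01$, $\sigma = 0.3$). It checks the square-root-of-time scaling of $R(0,t)$ and compares a seeded simulation of $S_t$ with $S_0e^{(\alpha-\delta)t}$; all function names are hypothetical.

```python
import math
import random

def return_moments(alpha, delta, sigma, t):
    """Mean and variance of R(0,t) = ln(S_t / S_0) in the model above."""
    return (alpha - delta - 0.5 * sigma ** 2) * t, sigma ** 2 * t

def expected_price(s0, alpha, delta, t):
    """E[S_t] = S_0 e^{(alpha - delta) t}."""
    return s0 * math.exp((alpha - delta) * t)

def simulate_price(s0, alpha, delta, sigma, t, rng):
    """One draw of S_t = S_0 exp((alpha - delta - sigma^2/2) t + sigma sqrt(t) Z)."""
    mean, var = return_moments(alpha, delta, sigma, t)
    z = rng.gauss(0.0, 1.0)
    return s0 * math.exp(mean + math.sqrt(var) * z)

# Square-root-of-time scaling: 10 one-year periods
m1, v1 = return_moments(0.15, 0.01, 0.3, 1.0)
m10, v10 = return_moments(0.15, 0.01, 0.3, 10.0)
print(m10 / m1, math.sqrt(v10) / math.sqrt(v1))   # about 10 and sqrt(10)

rng = random.Random(7)
draws = [simulate_price(40, 0.15, 0.01, 0.3, 1 / 3, rng) for _ in range(100_000)]
print(sum(draws) / len(draws), expected_price(40, 0.15, 0.01, 1 / 3))
```

The $-\frac{1}{2}\sigma^2$ in the drift of $R(0,t)$ is exactly what the lognormal mean formula adds back, which is why $E[S_t]$ depends only on $\alpha - \delta$.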

Actuary Speak: The parameter $\sigma$, the standard deviation of the annual rate of return, is called the volatility of the stock. This term comes from expressing the rate of return as an Ito process, $dR = \left(\alpha - \delta - \frac{1}{2}\sigma^2\right)dt + \sigma\,dZ$ with $Z$ a standard Brownian motion: $\left(\alpha - \delta - \frac{1}{2}\sigma^2\right)dt$ is called the drift term and $\sigma\,dZ$ is called the volatility term.

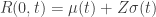

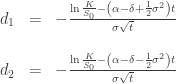

Confidence intervals: To find the range of stock prices that corresponds to a particular confidence interval, we need only look at the confidence interval on the standard normal distribution, then translate that interval into stock prices using the equation for $S_t$.

Example: ![z=[-1.96, 1.96]](https://s0.wp.com/latex.php?latex=z%3D%5B-1.96%2C+1.96%5D&bg=ffffff&fg=333333&s=0&c=20201002) represents the 95% confidence interval for the standard normal $Z$. Suppose $S_0 = 40$, $\alpha = 0.15$, $\delta = 0.01$, $\sigma = 0.3$, and $t = \frac{1}{3}$. Then the 95% confidence interval for $S_{1/3}$ is

![\left[40e^{(0.15-0.01-\frac{1}{2}0.3^2)\frac{1}{3} + (-1.96)0.3\sqrt{\frac{1}{3}}},40e^{(0.15-0.01-\frac{1}{2}0.3^2)\frac{1}{3} + (1.96)0.3\sqrt{\frac{1}{3}}}\right]](https://s0.wp.com/latex.php?latex=%5Cleft%5B40e%5E%7B%280.15-0.01-%5Cfrac%7B1%7D%7B2%7D0.3%5E2%29%5Cfrac%7B1%7D%7B3%7D+%2B+%28-1.96%290.3%5Csqrt%7B%5Cfrac%7B1%7D%7B3%7D%7D%7D%2C40e%5E%7B%280.15-0.01-%5Cfrac%7B1%7D%7B2%7D0.3%5E2%29%5Cfrac%7B1%7D%7B3%7D+%2B+%281.96%290.3%5Csqrt%7B%5Cfrac%7B1%7D%7B3%7D%7D%7D%5Cright%5D&bg=ffffff&fg=333333&s=0&c=20201002)

which corresponds to the price interval

![\left[29.40,57.98\right]](https://s0.wp.com/latex.php?latex=%5Cleft%5B29.40%2C57.98%5Cright%5D&bg=ffffff&fg=333333&s=0&c=20201002)
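The same calculation as a short Python sketch; `price_interval` is a hypothetical helper, and the $z$ bounds default to the $\pm 1.96$ used above.

```python
import math

def price_interval(s0, alpha, delta, sigma, t, z_lo=-1.96, z_hi=1.96):
    """Map a standard normal interval [z_lo, z_hi] to stock prices S_t."""
    drift = (alpha - delta - 0.5 * sigma ** 2) * t
    vol = sigma * math.sqrt(t)
    return (s0 * math.exp(drift + z_lo * vol),
            s0 * math.exp(drift + z_hi * vol))

lo, hi = price_interval(40, 0.15, 0.01, 0.3, 1 / 3)
print(f"[{lo:.2f}, {hi:.2f}]")  # [29.40, 57.98]
```

Because $S_t$ is a monotone function of $Z$, mapping the endpoints is enough; the resulting price interval is not symmetric around $E[S_t]$.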

Probabilities: Probability calculations on stock prices require a bit more mental gymnastics.
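One way to do the gymnastics: $\ln S_t$ is normal with mean $\ln S_0 + \left(\alpha - \delta - \frac{1}{2}\sigma^2\right)t$ and standard deviation $\sigma\sqrt{t}$, so $\Pr(S_t < K)$ comes from standardizing $\ln K$. A minimal Python sketch, with a hypothetical helper name and `statistics.NormalDist` standing in for the table:

```python
import math
from statistics import NormalDist

def prob_price_below(k, s0, alpha, delta, sigma, t):
    """Pr(S_t < K) in the lognormal stock model.

    ln S_t is normal with mean ln S_0 + (alpha - delta - sigma^2/2) t and
    standard deviation sigma sqrt(t), so standardize ln K and look up Phi.
    """
    mean = math.log(s0) + (alpha - delta - 0.5 * sigma ** 2) * t
    sd = sigma * math.sqrt(t)
    return NormalDist().cdf((math.log(k) - mean) / sd)

# Parameters from the confidence-interval example: S_0 = 40, alpha = 0.15,
# delta = 0.01, sigma = 0.3, t = 1/3
p_low = prob_price_below(29.40, 40, 0.15, 0.01, 0.3, 1 / 3)
p_high = 1 - prob_price_below(57.98, 40, 0.15, 0.01, 0.3, 1 / 3)
print(round(p_low, 3), round(p_high, 3))
```

As expected, each tail outside the 95% price interval carries about 2.5% probability.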

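Conditional Expected Value: Define

$$d_1 = \frac{\ln(S_0/K) + \left(\alpha - \delta + \frac{1}{2}\sigma^2\right)t}{\sigma\sqrt{t}}, \qquad d_2 = d_1 - \sigma\sqrt{t}$$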

Then

![\begin{array}{rll} \displaystyle E\left[S_t|S_t<K\right] &=& S_0e^{(\alpha - \delta)t}\frac{\mathcal{N}(-d_1)}{\mathcal{N}(-d_2)} \\ \\ \displaystyle E\left[S_t|S_t>K\right] &=& S_0e^{(\alpha - \delta)t}\frac{\mathcal{N}(d_1)}{\mathcal{N}(d_2)} \end{array}](https://s0.wp.com/latex.php?latex=%5Cbegin%7Barray%7D%7Brll%7D+%5Cdisplaystyle+E%5Cleft%5BS_t%7CS_t%3CK%5Cright%5D+%26%3D%26+S_0e%5E%7B%28%5Calpha+-+%5Cdelta%29t%7D%5Cfrac%7B%5Cmathcal%7BN%7D%28-d_1%29%7D%7B%5Cmathcal%7BN%7D%28-d_2%29%7D+%5C%5C+%5C%5C+%5Cdisplaystyle+E%5Cleft%5BS_t%7CS_t%3EK%5Cright%5D+%26%3D%26+S_0e%5E%7B%28%5Calpha+-+%5Cdelta%29t%7D%5Cfrac%7B%5Cmathcal%7BN%7D%28d_1%29%7D%7B%5Cmathcal%7BN%7D%28d_2%29%7D+%5Cend%7Barray%7D&bg=ffffff&fg=333333&s=0&c=20201002)

This gives the expected stock price at time $t$ given that it is less than $K$ or greater than $K$, respectively.
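A Python sketch of the two conditional expectations. The strike $K = 45$ and the other inputs are illustrative, and the helper names are hypothetical. A useful check is the law of total expectation: weighting the two conditional means by $\mathcal{N}(-d_2) = \Pr(S_t < K)$ and $\mathcal{N}(d_2) = \Pr(S_t > K)$ must give back $E[S_t] = S_0e^{(\alpha-\delta)t}$.

```python
import math
from statistics import NormalDist

N = NormalDist().cdf  # standard normal CDF

def d1_d2(s0, k, alpha, delta, sigma, t):
    """d_1 and d_2 as defined above (real-world rate alpha)."""
    sd = sigma * math.sqrt(t)
    d1 = (math.log(s0 / k) + (alpha - delta + 0.5 * sigma ** 2) * t) / sd
    return d1, d1 - sd

def conditional_expected_prices(s0, k, alpha, delta, sigma, t):
    """(E[S_t | S_t < K], E[S_t | S_t > K]) in the lognormal model."""
    d1, d2 = d1_d2(s0, k, alpha, delta, sigma, t)
    forward = s0 * math.exp((alpha - delta) * t)   # E[S_t]
    below = forward * N(-d1) / N(-d2)
    above = forward * N(d1) / N(d2)
    return below, above

below, above = conditional_expected_prices(40, 45, 0.15, 0.01, 0.3, 1 / 3)
print(below, above)
```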

Black-Scholes formula: A call option $C$ on stock $S$ with strike price $K$ has value $\max(0, S_t - K)$ at time $t$. The option pays out if $S_t > K$. So the value of this option at time 0 is the probability that it pays out at time $t$, discounted by the risk-free interest rate $r$, and multiplied by the expected value of $S_t - K$ given that $S_t > K$. In other words,

![\begin{array}{rll} \displaystyle C_0 &=& e^{-rt}Pr\left(S_t>K\right)E\left[S_t-K|S_t>K\right] \\ \\ &=& e^{-rt}\mathcal{N}(d_2)\left(E\left[S_t|S_t>K\right] - E\left[K|S_t>K\right]\right) \\ \\ &=& e^{-rt}\mathcal{N}(d_2)\left(S_0e^{(\alpha - \delta)t}\frac{\mathcal{N}(d_1)}{\mathcal{N}(d_2)} - K\right) \end{array}](https://s0.wp.com/latex.php?latex=%5Cbegin%7Barray%7D%7Brll%7D+%5Cdisplaystyle+C_0+%26%3D%26+e%5E%7B-rt%7DPr%5Cleft%28S_t%3EK%5Cright%29E%5Cleft%5BS_t-K%7CS_t%3EK%5Cright%5D+%5C%5C+%5C%5C+%26%3D%26+e%5E%7B-rt%7D%5Cmathcal%7BN%7D%28d_2%29%5Cleft%28E%5Cleft%5BS_t%7CS_t%3EK%5Cright%5D+-+E%5Cleft%5BK%7CS_t%3EK%5Cright%5D%5Cright%29+%5C%5C+%5C%5C+%26%3D%26+e%5E%7B-rt%7D%5Cmathcal%7BN%7D%28d_2%29%5Cleft%28S_0e%5E%7B%28%5Calpha+-+%5Cdelta%29t%7D%5Cfrac%7B%5Cmathcal%7BN%7D%28d_1%29%7D%7B%5Cmathcal%7BN%7D%28d_2%29%7D+-+K%5Cright%29+%5Cend%7Barray%7D&bg=ffffff&fg=333333&s=0&c=20201002)

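Black-Scholes prices options as if all investors were risk neutral. This means assets do not pay a risk premium for being more risky: every asset is expected to earn the risk-free rate. Long story short, $\alpha = r$. So in the Black-Scholes formula:

$$d_1 = \frac{\ln(S_0/K) + \left(r - \delta + \frac{1}{2}\sigma^2\right)t}{\sigma\sqrt{t}}, \qquad d_2 = d_1 - \sigma\sqrt{t}$$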

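Continuing our derivation of $C_0$ but replacing $\alpha$ with $r$,

$$\begin{array}{rll} C_0 &=& e^{-rt}\mathcal{N}(d_2)\left(S_0e^{(r - \delta)t}\frac{\mathcal{N}(d_1)}{\mathcal{N}(d_2)} - K\right) \\ \\ &=& S_0e^{-\delta t}\mathcal{N}(d_1) - Ke^{-rt}\mathcal{N}(d_2) \end{array}$$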

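For a put option $P$ with payout $K - S_t$ for $S_t < K$ and 0 otherwise,

$$\begin{array}{rll} P_0 &=& e^{-rt}Pr\left(S_t<K\right)E\left[K - S_t|S_t<K\right] \\ \\ &=& e^{-rt}\mathcal{N}(-d_2)\left(K - S_0e^{(r - \delta)t}\frac{\mathcal{N}(-d_1)}{\mathcal{N}(-d_2)}\right) \\ \\ &=& Ke^{-rt}\mathcal{N}(-d_2) - S_0e^{-\delta t}\mathcal{N}(-d_1) \end{array}$$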

These are the famous Black-Scholes formulas for option pricing. When derived on the back of a cocktail napkin, they are indispensable for impressing the ladies at your local bar. :p
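For a cocktail napkin with a Python interpreter, here is a minimal sketch of both formulas, assuming `statistics.NormalDist` for $\mathcal{N}$. The helper names are hypothetical. It also recomputes the call through the conditional-expectation route with $\alpha = r$, and checks put-call parity, $C_0 - P_0 = S_0e^{-\delta t} - Ke^{-rt}$, which follows from subtracting the two formulas.

```python
import math
from statistics import NormalDist

N = NormalDist().cdf  # standard normal CDF

def bs_d1_d2(s0, k, r, delta, sigma, t):
    """d_1, d_2 with alpha replaced by the risk-free rate r."""
    sd = sigma * math.sqrt(t)
    d1 = (math.log(s0 / k) + (r - delta + 0.5 * sigma ** 2) * t) / sd
    return d1, d1 - sd

def bs_call(s0, k, r, delta, sigma, t):
    d1, d2 = bs_d1_d2(s0, k, r, delta, sigma, t)
    return s0 * math.exp(-delta * t) * N(d1) - k * math.exp(-r * t) * N(d2)

def bs_put(s0, k, r, delta, sigma, t):
    d1, d2 = bs_d1_d2(s0, k, r, delta, sigma, t)
    return k * math.exp(-r * t) * N(-d2) - s0 * math.exp(-delta * t) * N(-d1)

def call_via_conditional(s0, k, r, delta, sigma, t):
    """e^{-rt} Pr(S_t > K) (E[S_t | S_t > K] - K), with alpha = r."""
    d1, d2 = bs_d1_d2(s0, k, r, delta, sigma, t)
    cond_mean = s0 * math.exp((r - delta) * t) * N(d1) / N(d2)
    return math.exp(-r * t) * N(d2) * (cond_mean - k)

# Textbook check: S0 = K = 100, r = 5%, no dividends, sigma = 20%, one year
call = bs_call(100, 100, 0.05, 0.0, 0.2, 1.0)
put = bs_put(100, 100, 0.05, 0.0, 0.2, 1.0)
print(round(call, 4), round(put, 4))  # 10.4506 5.5735
```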

. No refund is paid if losses exceed $100. The refund amount

can be expressed as